Welcome to our guide on implementing neural networks from scratch. Understanding the fundamentals of neural networks is valuable for anyone interested in artificial intelligence and data science. Neural networks, loosely inspired by the human brain, underpin many modern machine learning applications, enabling computers to learn patterns from data and make predictions without hand-written rules.

In this blog, we will delve into the intricacies of neural networks, starting from the basics and gradually progressing to more advanced concepts. Whether you’re a beginner looking to grasp the foundational concepts or an experienced developer aiming to refine your skills, this guide will provide you with practical insights and hands-on examples. By the end, you’ll not only understand how neural networks work but also gain the confidence to build and implement your own from scratch.

8 Steps to Implementing Neural Networks from Scratch

1. Define the Neural Network Architecture

To implement a neural network from scratch, start by defining its architecture. This includes determining the number of layers the network will have and the number of neurons in each layer. The architecture choice depends on the complexity of the problem you’re addressing. For instance, a simple feedforward neural network might consist of an input layer, one or more hidden layers, and an output layer. Each layer contributes to learning different features from the data, with the output layer providing the final predictions or classifications.
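As a minimal sketch, a feedforward architecture can be written down as a list of layer sizes. The sizes below (4 inputs, two hidden layers of 8 neurons, 3 outputs) and the helper names are illustrative assumptions, not values prescribed by this guide.

```python
# Hypothetical architecture: 4 input features, two hidden layers, 3 output classes.
layer_sizes = [4, 8, 8, 3]

def parameter_shapes(sizes):
    """Return (weight_shape, bias_shape) for each pair of consecutive layers."""
    shapes = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        # One row of weights per neuron in the receiving layer, plus one bias each.
        shapes.append(((n_out, n_in), (n_out,)))
    return shapes

def count_parameters(sizes):
    """Total number of trainable weights and biases in the network."""
    return sum(n_in * n_out + n_out for n_in, n_out in zip(sizes[:-1], sizes[1:]))

shapes = parameter_shapes(layer_sizes)
total = count_parameters(layer_sizes)
print(shapes)
print(total)  # 139 trainable parameters for this example
```

Counting parameters early gives a rough sense of model capacity: more layers or wider layers mean more parameters to fit and more data needed to fit them well.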

2. Initialize Weights and Biases

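Weights and biases are the parameters the network learns, and their starting values affect how well training proceeds. Weights are typically initialized to small random values so that neurons within the same layer start out different and can learn different features; if they all started equal, they would receive identical updates and remain identical. The scale of the random values matters as well: schemes such as Xavier (Glorot) and He initialization scale the weights by layer size to keep signals and gradients from vanishing or exploding as they pass through many layers. Biases are usually initialized to zero or a small constant. Proper initialization sets the stage for effective learning and convergence of the neural network.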

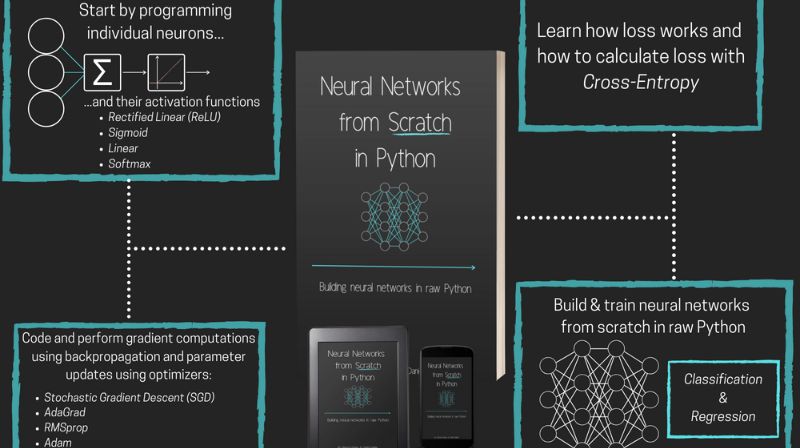
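The sketch below shows one possible way to initialize a network using only the Python standard library. It assumes He-style initialization (weights drawn from a normal distribution with variance 2 / n_in) and zero biases; the guide itself only calls for random weights, so treat that scheme and the function names as illustrative choices.

```python
import math
import random

def init_layer(n_in, n_out, rng):
    """He-style initialization (an assumed scheme): weights ~ N(0, 2 / n_in), biases = 0."""
    std = math.sqrt(2.0 / n_in)
    weights = [[rng.gauss(0.0, std) for _ in range(n_in)] for _ in range(n_out)]
    biases = [0.0] * n_out
    return weights, biases

def init_network(layer_sizes, seed=0):
    """Return a list of (weights, biases) tuples, one per pair of consecutive layers."""
    rng = random.Random(seed)  # fixed seed so runs are reproducible
    return [
        init_layer(n_in, n_out, rng)
        for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:])
    ]

params = init_network([4, 8, 3])
first_weights, first_biases = params[0]
print(len(first_weights), len(first_weights[0]))  # 8 rows of 4 weights each
```

Because every weight is drawn independently, no two neurons in a layer start with the same weights, which is what allows them to learn different features.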

3. Choose Activation Functions

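Activation functions introduce non-linearity into the neural network, enabling it to learn complex patterns; without them, a stack of layers would collapse into a single linear transformation. Common activation functions include sigmoid (output between 0 and 1), tanh (output between -1 and 1), and ReLU (Rectified Linear Unit, which outputs zero for negative inputs and the input itself otherwise). The choice depends on where the function is used and on the task. ReLU is widely used in hidden layers due to its simplicity and effectiveness in mitigating the vanishing gradient problem, while the output layer typically uses sigmoid for binary classification, softmax for multi-class classification, and no activation (a linear output) for regression.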
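For reference, here is a minimal sketch of the three activation functions named above, together with their derivatives, which are needed later for backpropagation. The function names are our own, and the inputs are single floats for simplicity.

```python
import math

def sigmoid(x):
    """Logistic function, written to avoid overflow for large negative x."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def sigmoid_grad(x):
    s = sigmoid(x)
    return s * (1.0 - s)

def tanh(x):
    return math.tanh(x)

def tanh_grad(x):
    return 1.0 - math.tanh(x) ** 2

def relu(x):
    return max(0.0, x)

def relu_grad(x):
    # The derivative is undefined at exactly 0; using 0 there is a common convention.
    return 1.0 if x > 0 else 0.0

for name, fn in [("sigmoid", sigmoid), ("tanh", tanh), ("relu", relu)]:
    print(name, [round(fn(v), 3) for v in (-2.0, 0.0, 2.0)])
```

Note that the gradients of sigmoid and tanh shrink toward zero for large positive or negative inputs, while the ReLU gradient stays at 1 for any positive input. This is the reason ReLU helps with vanishing gradients.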

4. Implement Forward Propagation

Forward propagation involves passing the input data through the neural network to compute predictions. Each layer computes a weighted sum of its inputs, adds a bias, applies the activation function, and passes the result to the next layer. The output layer generates predictions that are compared to the actual labels during training. This step forms the basis of how neural networks process information and make predictions.
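The forward pass described above can be sketched in a few lines. This example assumes ReLU on hidden layers and a linear (identity) output layer, and the hand-picked weights are purely illustrative so the arithmetic is easy to follow.

```python
def relu(values):
    return [max(0.0, v) for v in values]

def dense(weights, biases, inputs):
    """Weighted sum of the inputs plus a bias, computed for every neuron in the layer."""
    return [
        sum(w * x for w, x in zip(row, inputs)) + b
        for row, b in zip(weights, biases)
    ]

def forward(params, x):
    """params is a list of (weights, biases); ReLU on hidden layers, identity on the output."""
    activation = x
    for i, (weights, biases) in enumerate(params):
        z = dense(weights, biases, activation)
        is_output_layer = i == len(params) - 1
        activation = z if is_output_layer else relu(z)
    return activation

# Hypothetical 2 -> 2 -> 1 network with hand-picked parameters.
params = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, -1.0]),  # hidden layer
    ([[1.0, 2.0]], [0.1]),                     # output layer
]
output = forward(params, [2.0, 1.0])
print(output)  # approximately [2.1]
```

Working through it by hand: the hidden layer produces [1.0, 0.5], both positive so ReLU leaves them unchanged, and the output neuron computes 1.0 * 1.0 + 2.0 * 0.5 + 0.1.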

5. Perform Backpropagation

Backpropagation is the cornerstone of training neural networks. It calculates the gradient of the loss function with respect to each weight and bias in the network by applying the chain rule layer by layer, starting at the output and propagating the error backward toward the input. These gradients are then used to update the network’s parameters during optimization, typically using gradient descent or one of its variants. Repeating the cycle of forward pass, backward pass, and parameter update gradually reduces the prediction error.
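To make the chain rule concrete, here is a minimal sketch of backpropagation for a tiny network with one tanh hidden layer, a single linear output, and a squared-error loss on one training example. The network shape, the loss, the parameter values, and the learning rate are all assumptions chosen to keep the example small.

```python
import math

def forward(W1, b1, W2, b2, x):
    """Return hidden activations h and the prediction y_hat."""
    z1 = [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(W1, b1)]
    h = [math.tanh(z) for z in z1]
    y_hat = sum(w * hi for w, hi in zip(W2, h)) + b2
    return h, y_hat

def loss(y_hat, y):
    return 0.5 * (y_hat - y) ** 2

def backward(W1, b1, W2, b2, x, y):
    """Gradients of the loss with respect to every parameter, via the chain rule."""
    h, y_hat = forward(W1, b1, W2, b2, x)
    d_y = y_hat - y                                   # dL/dy_hat
    dW2 = [d_y * hi for hi in h]                      # output weights
    db2 = d_y
    # Error pushed back through the output weights and the tanh derivative (1 - h^2).
    d_z1 = [d_y * w * (1.0 - hi * hi) for w, hi in zip(W2, h)]
    dW1 = [[dz * xi for xi in x] for dz in d_z1]
    db1 = list(d_z1)
    return dW1, db1, dW2, db2

def gradient_step(W1, b1, W2, b2, x, y, learning_rate):
    """One gradient descent update; returns new parameters."""
    dW1, db1, dW2, db2 = backward(W1, b1, W2, b2, x, y)
    new_W1 = [[w - learning_rate * g for w, g in zip(r, gr)] for r, gr in zip(W1, dW1)]
    new_b1 = [b - learning_rate * g for b, g in zip(b1, db1)]
    new_W2 = [w - learning_rate * g for w, g in zip(W2, dW2)]
    new_b2 = b2 - learning_rate * db2
    return new_W1, new_b1, new_W2, new_b2

# Hypothetical parameters and a single training example.
W1, b1 = [[0.5, -0.3], [0.8, 0.2]], [0.0, 0.0]
W2, b2 = [1.0, -1.0], 0.0
x, y = [1.0, 2.0], 1.0

loss_before = loss(forward(W1, b1, W2, b2, x)[1], y)
updated = gradient_step(W1, b1, W2, b2, x, y, learning_rate=0.01)
loss_after = loss(forward(*updated, x)[1], y)
print(loss_before, loss_after)  # the loss should drop after the update
```

A useful sanity check when writing backpropagation by hand is to compare each analytic gradient against a finite-difference estimate: nudge one parameter by a tiny amount, recompute the loss, and confirm the slope matches.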

6. Choose a Loss Function

The choice of a loss function depends on the type of problem you’re solving. Common loss functions include mean squared error (MSE) for regression tasks and cross-entropy loss for classification tasks. The loss function quantifies how well the network’s predictions align with the actual labels. During training, the goal is to minimize this loss function to improve the model’s accuracy and predictive power.
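The two loss functions mentioned above can be sketched as follows. The cross-entropy version here assumes the network outputs raw scores (logits) that are turned into probabilities with softmax, which is a common setup for multi-class classification but only one of several possible conventions.

```python
import math

def mse(predictions, targets):
    """Mean squared error for regression."""
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / len(predictions)

def softmax(logits):
    """Convert raw scores to probabilities; subtracting the max avoids overflow."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(logits, target_index):
    """Negative log-probability of the correct class, computed from the logits."""
    m = max(logits)
    log_sum_exp = m + math.log(sum(math.exp(z - m) for z in logits))
    return log_sum_exp - logits[target_index]

print(mse([1.0, 2.0, 3.0], [1.0, 2.0, 5.0]))   # one prediction is off by 2
print(cross_entropy([2.0, 0.5, -1.0], 0))      # small loss: class 0 has the top score
print(cross_entropy([2.0, 0.5, -1.0], 2))      # larger loss: class 2 has the lowest score
```

Both functions return zero or close to zero when predictions match the labels and grow as predictions get worse, which is what makes them suitable targets for minimization.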

7. Optimize Hyperparameters

Hyperparameters such as learning rate, batch size, and regularization parameters play a crucial role in training neural networks effectively. Learning rate controls the step size during parameter updates, while batch size determines the number of samples processed before updating weights. Regularization techniques like L1 and L2 regularization help prevent overfitting by penalizing large weights. Finding optimal hyperparameter values often involves experimentation and tuning based on the specific dataset and problem domain.
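The sketch below shows where these three hyperparameters enter a training loop: the batch size controls how the data is split, while the learning rate and the L2 strength appear in the parameter update. The helper names and the example values are assumptions for illustration; the L2 term assumes a penalty of (l2_lambda / 2) * w^2 per weight, whose gradient is l2_lambda * w.

```python
import random

def minibatches(data, batch_size, rng):
    """Shuffle the data and yield it in chunks of at most batch_size samples."""
    indices = list(range(len(data)))
    rng.shuffle(indices)
    for start in range(0, len(indices), batch_size):
        yield [data[i] for i in indices[start:start + batch_size]]

def sgd_update(weights, grads, learning_rate, l2_lambda=0.0):
    """w <- w - learning_rate * (grad + l2_lambda * w)."""
    return [
        w - learning_rate * (g + l2_lambda * w)
        for w, g in zip(weights, grads)
    ]

data = list(range(10))  # stand-in for 10 training samples
batches = list(minibatches(data, batch_size=4, rng=random.Random(0)))
print([len(b) for b in batches])  # [4, 4, 2]

updated = sgd_update([1.0, -2.0], [0.5, 0.5], learning_rate=0.1, l2_lambda=0.01)
print(updated)  # approximately [0.949, -2.048]
```

The L2 term pulls each weight slightly toward zero on every update, with larger weights pulled harder. Raising `l2_lambda` strengthens that pull, and raising `learning_rate` scales the whole step.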

8. Evaluate and Fine-Tune the Model

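Once trained, evaluate the neural network’s performance on data it did not see during training. Use a validation set to compare models and tune hyperparameters, and keep a separate test set for the final performance estimate; tuning against the test set makes the reported results overly optimistic. For classification tasks, metrics such as accuracy, precision, recall, and F1 score provide insights into the model’s effectiveness. Fine-tuning involves adjusting hyperparameters or exploring different network architectures to improve performance further. Iterative refinement based on evaluation results helps create a robust neural network model capable of generalizing well to unseen data.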
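As a small illustration, the four metrics named above can be computed for a binary classifier from the counts of true and false positives and negatives. The labels and predictions below are made up for the example.

```python
def binary_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 for labels in {0, 1}."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)

    accuracy = (tp + tn) / len(y_true)
    # Guard against division by zero when a class is never predicted or never present.
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)) if (precision + recall) else 0.0
    return {"accuracy": accuracy, "precision": precision, "recall": recall, "f1": f1}

# Hypothetical validation labels and model predictions.
y_true = [1, 0, 1, 1, 0, 0, 1, 0]
y_pred = [1, 0, 0, 1, 0, 1, 1, 0]
metrics = binary_metrics(y_true, y_pred)
print(metrics)  # all four metrics equal 0.75 for this example
```

Here the model makes one false positive and one false negative out of eight samples. On imbalanced datasets the four numbers can diverge sharply, which is why accuracy alone can be misleading.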

Conclusion

In conclusion, mastering the implementation of neural networks from scratch is a rewarding endeavor that opens doors to countless possibilities in the realm of artificial intelligence. Throughout this guide, we’ve covered essential concepts such as neuron activation, forward and backward propagation, and optimization techniques like gradient descent. Armed with this knowledge, you now have the foundation to explore more complex neural network architectures and tackle real-world problems with confidence.

Remember, the field of machine learning is constantly evolving, with new algorithms and methodologies emerging regularly. As you continue your journey, stay curious, experiment with different approaches, and stay updated with the latest advancements. Whether you’re developing applications in healthcare, finance, or robotics, neural networks will continue to play a pivotal role in shaping the future of technology.

FAQs

What are neural networks and why are they important in machine learning?

Neural networks are computational models inspired by the human brain’s structure and function. They excel at learning complex patterns from data, making them essential for tasks like image recognition, natural language processing, and predictive analytics in machine learning.

How do neural networks learn?

Neural networks learn by adjusting weights and biases during training based on the error between predicted and actual outputs. Backpropagation computes how much each parameter contributed to that error, and an optimizer such as gradient descent uses those gradients to update the parameters, improving the network’s predictions over time.

What are the steps to implement a neural network from scratch?

Implementing a neural network involves defining the architecture (number of layers, neurons per layer), initializing weights and biases, performing forward propagation to compute outputs, calculating loss, conducting backward propagation to update weights using gradient descent, and iterating until convergence.
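Putting those steps together, the following is a minimal end-to-end sketch that trains a small network on the XOR problem. Everything specific here is an assumption made for illustration: the 2-4-1 architecture, tanh hidden units, a sigmoid output with binary cross-entropy loss, full-batch gradient descent, and a fixed number of epochs in place of a convergence test.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_xor(hidden=4, learning_rate=0.5, epochs=2000, seed=0):
    """Train a 2 -> hidden -> 1 network on XOR; return the average loss per epoch."""
    rng = random.Random(seed)
    data = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]

    # Define the architecture and initialize random weights and zero biases.
    W1 = [[rng.uniform(-1.0, 1.0) for _ in range(2)] for _ in range(hidden)]
    b1 = [0.0] * hidden
    W2 = [rng.uniform(-1.0, 1.0) for _ in range(hidden)]
    b2 = 0.0

    history = []
    n = len(data)
    for _ in range(epochs):
        gW1 = [[0.0, 0.0] for _ in range(hidden)]
        gb1 = [0.0] * hidden
        gW2 = [0.0] * hidden
        gb2 = 0.0
        total_loss = 0.0

        for x, y in data:
            # Forward propagation.
            h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b)
                 for row, b in zip(W1, b1)]
            p = sigmoid(sum(w * hi for w, hi in zip(W2, h)) + b2)

            # Binary cross-entropy loss (small epsilon avoids log(0)).
            total_loss -= y * math.log(p + 1e-12) + (1.0 - y) * math.log(1.0 - p + 1e-12)

            # Backward propagation: for sigmoid + cross-entropy, dL/dz2 = p - y.
            dz2 = p - y
            gb2 += dz2
            for j in range(hidden):
                gW2[j] += dz2 * h[j]
                dz1 = dz2 * W2[j] * (1.0 - h[j] ** 2)
                gb1[j] += dz1
                for k in range(2):
                    gW1[j][k] += dz1 * x[k]

        history.append(total_loss / n)

        # Gradient descent update using gradients averaged over the batch.
        for j in range(hidden):
            W2[j] -= learning_rate * gW2[j] / n
            b1[j] -= learning_rate * gb1[j] / n
            for k in range(2):
                W1[j][k] -= learning_rate * gW1[j][k] / n
        b2 -= learning_rate * gb2 / n

    return history

history = train_xor()
print(history[0], history[-1])  # the average loss should be lower at the end
```

How far the loss falls depends on the random seed, the learning rate, and the number of hidden units, so it is worth experimenting with those arguments. In practice, training usually stops when the loss on a validation set stops improving and not after a fixed epoch count.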

What programming languages are commonly used for implementing neural networks?

Python is widely preferred for implementing neural networks due to its simplicity, extensive libraries (like TensorFlow and PyTorch), and strong community support. Other languages like R and Julia also have frameworks for neural network implementation, but Python remains dominant in the field.